Radioactivity and X-rays

by Hanna Kurlanda-Witek

The discoveries of X-rays and radioactivity at the close of the 19th century became cornerstones of modern science. They led to a new understanding of the structure of the atom and, with it, a new kind of physics known as quantum physics; to a solution for the age of the Earth; and to a revolution in the treatment of cancer. The study of radioactivity swiftly progressed from glowing chemical elements to the nuclear age, tying scientific problems to both politics and ethics. Questions about the safety and benefits of radiation and radioactivity have spilled over into the 21st century. Methods of diagnosing and treating disease with X-rays and radioactive isotopes are still being perfected, but the events of the previous century have brought about a near-unanimous disapproval of nuclear warfare and, at best, a sceptical attitude towards nuclear power as the main source of energy after fossil fuels. How was radioactivity discovered? How have we benefitted from applications of ionising radiation? And how much is too much?

Radiation invisible to our eyes

In 1857, the French photographer Abel Niépce de Saint Victor noticed that uranium salts left an imprint on photographic paper when left in contact with it for a long period of time. He published several papers and wrote to the French Academy of Sciences about this strange occurrence, but few took note of it at the time. Almost forty years later, the physicist Henri Becquerel repeated the experiment with the aim of finding out whether uranium sulphate could emit X-rays. Wilhelm Conrad Röntgen had discovered X-rays only a few months earlier, in 1895, astounding scientists with rays that could pass through black paper and even reveal the bones inside a human body. Becquerel had long been interested in the phosphorescence of materials and hoped to demonstrate that uranium salt crystals would emit X-rays after being exposed to sunlight. Indeed, radiation from the crystals penetrated the black paper and left an image on the photographic plates, but, bizarrely, the same thing happened without any exposure to sunlight: Becquerel had left the wrapped plates and the uranium salts together in a drawer for several days. As Frederick Soddy explained at the 2nd Lindau Nobel Laureate Meeting in 1952, it was the experiment that rocked the world, yet for the following two years it remained “a curiosity of science”, until Pierre Curie suggested to his new wife Marie that she study the “Becquerel rays” for her PhD project. The Curies used an electrometer to measure the weak electric current that uranium radiation produced in the surrounding air, and these meticulous measurements allowed them to compare the intensity of radiation from different samples. They found that thorium was also radioactive and, very shortly afterwards, discovered two new radioactive elements, polonium and radium. Soddy described these remarkable discoveries in his 1952 Lindau lecture, with the Curies’ daughter, Irène Joliot-Curie, herself a Nobel Laureate, in the audience:

(00:24:39 - 00:27:55)

In 1903, the Curies and Becquerel jointly received the Nobel Prize in Physics for their discoveries, and in 1911 Marie Curie received a second Nobel Prize, this time in Chemistry, for the discovery of radium and polonium. The findings greatly puzzled the scientific community: how could these elements spontaneously give off energy without any visible change in themselves? This was, as Soddy called it, “a new heterogeneity in matter”. In a 1900 paper for the Revue Scientifique, Marie Curie admitted that radioactivity abolished the laws of chemistry as they were then known. At around the same time, at McGill University in Montreal, Ernest Rutherford, a physicist from New Zealand, and Frederick Soddy, a chemist from England, set out to uncover the cause and nature of radioactivity. Rutherford had already characterised two types of radiation: the easily absorbed alpha-rays (now known to consist of two protons and two neutrons) and the more penetrating beta-rays (electrons). The still more penetrating gamma-rays, high-energy photons emitted by the nucleus, were discovered by Paul Villard in 1900. Rutherford was particularly puzzled by the fact that some radioactive elements gave off radioactive gases, which he called “emanations”.

Experiments that Rutherford and Soddy performed during their eighteen-month collaboration showed that radioactivity is not a single event. A radioactive element spontaneously transforms into another element with different properties, which may in turn transform into yet another; radiation is produced at each step of this cascade. Moreover, the time it takes for half of the atoms of a radioactive element to decay is constant and characteristic of that element, a property that became known as its half-life. Rutherford realised that radioactive decay could therefore serve as a kind of clock for calculating the age of the Earth, a riddle that had eluded generations of scientists.
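The decay law behind Rutherford’s clock can be sketched in a few lines of Python. This is a minimal illustration under textbook assumptions: a single parent decaying to a stable daughter, no daughter atoms present at the start, and a closed sample that neither gains nor loses atoms. Real radiometric dating has to handle decay chains and other complications. The function names and the uranium-238 half-life constant (about 4.47 billion years) are supplied for illustration and are not taken from the article.

```python
import math

# Half-life of uranium-238 in years (illustrative constant, about 4.47 billion years)
U238_HALF_LIFE_YEARS = 4.468e9


def remaining_fraction(t, half_life):
    """Fraction of parent atoms left after time t: N/N0 = (1/2)^(t / T_half)."""
    return 0.5 ** (t / half_life)


def age_from_ratio(daughter_to_parent, half_life):
    """Elapsed time from a measured daughter/parent atom ratio D/P.

    Simplified model (assumption): no daughter atoms at t = 0 and a closed
    system, so D + P = N0 and t = T_half / ln 2 * ln(1 + D/P).
    """
    return half_life / math.log(2) * math.log(1 + daughter_to_parent)


if __name__ == "__main__":
    # After two half-lives, one quarter of the parent atoms remain.
    print(remaining_fraction(2 * U238_HALF_LIFE_YEARS, U238_HALF_LIFE_YEARS))  # 0.25
    # Equal numbers of parent and daughter atoms correspond to one half-life.
    print(f"{age_from_ratio(1.0, U238_HALF_LIFE_YEARS):.3e} years")
```

Because each half-life halves what remains, a daughter-to-parent ratio of 3 corresponds to two half-lives, a ratio of 7 to three, and so on.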

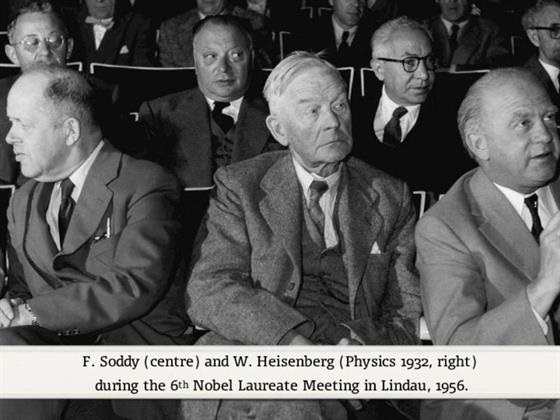

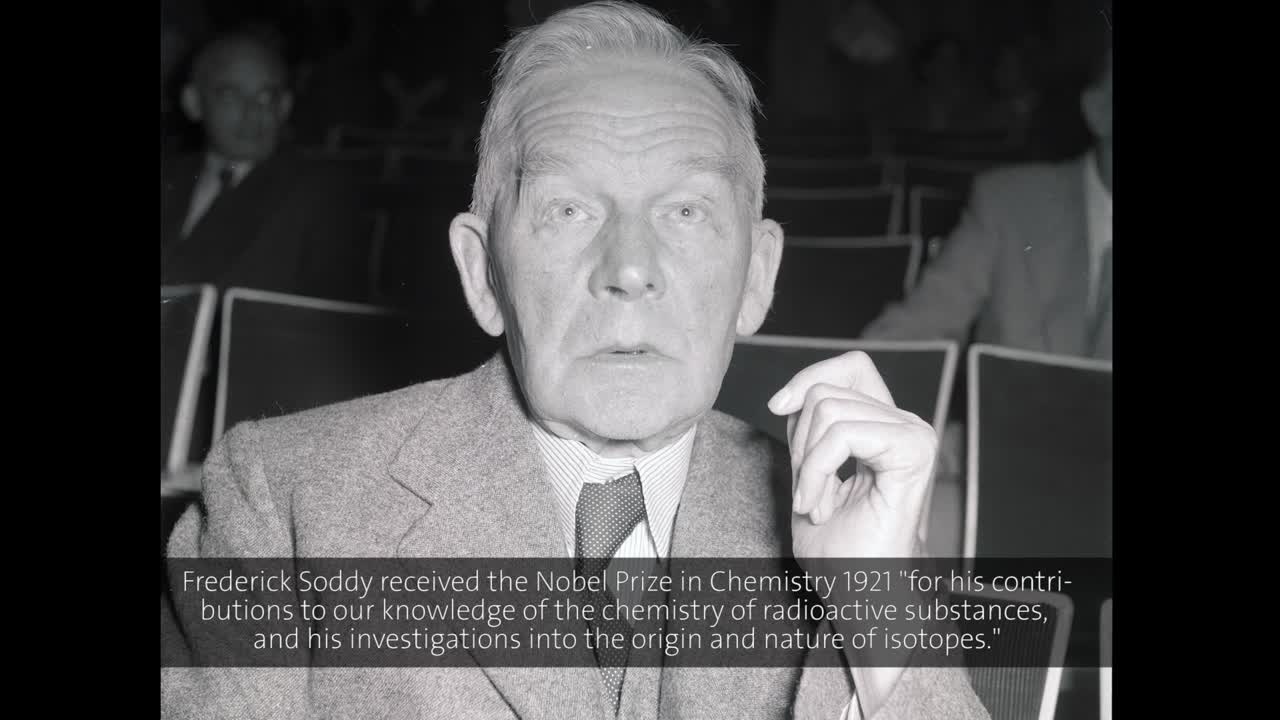

Rutherford and Soddy were very careful when publishing the results of their work. This was more than a curious phenomenon: the transformation of one element into another seemed almost magical, and they did not want to be regarded as alchemists. Nevertheless, both Rutherford and Soddy were awarded the Nobel Prize in Chemistry: Rutherford in 1908, “for his investigations into the disintegration of the elements, and the chemistry of radioactive substances”, and Soddy in 1921, “for his contributions to our knowledge of the chemistry of radioactive substances, and his investigations into the origin and nature of isotopes”. In the ten years following his work in Montreal, Soddy concentrated on how to fit the growing number of newly identified radioactive substances into the long-established periodic table. By 1913, he had concluded that many of them were not so much new elements as different forms of the same element, with identical chemical properties but different atomic weights. He called these forms “isotopes”, from the Greek words isos (equal) and tópos (place), because isotopes of an element occupy the same place in the periodic table while differing in mass and, if they are radioactive, in half-life. During his lecture in Lindau in 1954, Soddy called isotopes “one of nature’s best-kept secrets”.

The neutral subatomic particle

Between 1909 and 1911, Rutherford, having already received his Nobel Prize, made yet another extraordinary discovery. He and his assistants Hans Geiger and Ernest Marsden conducted an experiment in which alpha particles were fired at a thin piece of gold foil. Astonishingly, approximately 1 in 20 000 of the alpha particles were deflected back from the foil, so they were clearly hitting something small and dense at the core of the gold atoms. Rutherford concluded that the atom has a small, positively charged nucleus surrounded by a cloud of negatively charged electrons. By 1920, Rutherford had put forward the idea that the nucleus also contains a neutral particle with no electric charge, probably formed from a proton and an electron bound together. However, it took more than a decade before experiments could confirm the existence of such a particle. Walter Bothe and Herbert Becker bombarded beryllium with alpha particles, which caused it to emit a highly penetrating radiation. Irène and Frédéric Joliot-Curie repeated the experiment but directed this radiation at a paraffin wax sample, which ejected high-energy protons from its hydrogen atoms; they attributed the effect to very energetic gamma-rays. James Chadwick, a physicist who had worked with Rutherford for many years, realised that the radiation from beryllium must instead consist of heavy neutral particles, with roughly the mass of a proton, ejected from the nucleus. In 1932, after only two weeks of experiments, Chadwick submitted the paper “Possible Existence of a Neutron”, which was followed by the paper “The Existence of a Neutron” a few months later. Chadwick received the Nobel Prize in Physics for the discovery of the neutron in 1935. This soon proved to be more than the identification of another subatomic particle. Because the neutron carries no charge, it is not repelled by the positively charged nucleus, and scientists in the United States, Great Britain, France, Germany and Italy were soon intensely searching for ways to release large amounts of energy by bombarding atomic nuclei with neutrons.

The frenzy in experimental nuclear physics developed in parallel with political unrest in Europe. In 1933, Leo Szilard conceived the idea of a nuclear chain reaction, in which a nucleus struck by one neutron releases two or more neutrons, and he patented it the following year. The turning point came at the end of 1938, when Otto Hahn and Fritz Strassmann found barium among the products of uranium bombarded with neutrons, and Lise Meitner and Otto Frisch explained the result as nuclear fission. In fission, a neutron is absorbed by the heavy nucleus of uranium-235 (²³⁵U), which turns into an excited uranium-236 nucleus (²³⁶U) and then splits into two lighter nuclei, such as isotopes of barium and krypton, releasing further neutrons and a large amount of energy, mostly as kinetic energy of the fragments. These findings greatly alarmed nuclear physicists in the United States, particularly Leo Szilard, Enrico Fermi and Albert Einstein, who decided to warn President Roosevelt himself that nuclear weapons might be built in the near future. This led to the establishment of the Uranium Committee, which eventually became the Manhattan Project in 1942. At a recent meeting in Lindau, Nobel Laureate Roy J. Glauber explained nuclear fission in the introduction of his lecture:

(00:00:27 - 00:06:56)
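The fission of uranium-235 can be written as a balanced nuclear equation. The fragments shown below, barium-141 and krypton-92 with three neutrons, are one commonly cited outcome chosen for illustration; in practice the nucleus can split into many different pairs of lighter nuclei. Mass numbers balance (1 + 235 = 141 + 92 + 3 = 236), as do charges (92 = 56 + 36), and each such fission releases roughly 200 MeV. Because more neutrons come out than went in, the reaction can sustain the kind of chain reaction Szilard had envisaged.

$$
{}^{1}_{0}\mathrm{n} + {}^{235}_{92}\mathrm{U} \;\rightarrow\; {}^{236}_{92}\mathrm{U}^{*} \;\rightarrow\; {}^{141}_{56}\mathrm{Ba} + {}^{92}_{36}\mathrm{Kr} + 3\,{}^{1}_{0}\mathrm{n}
$$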

“It’s a terrible thing that we made” – Robert R. Wilson, as quoted by Richard P. Feynman

The Manhattan Project brought together talented physicists from all over the country with the aim of constructing an atomic bomb. Its central weapons laboratory was in “an unstated and distant location”, as Glauber writes in the abstract of his talk: Los Alamos, New Mexico. Many of those working on the science behind the project were present or future Nobel Laureates. In this lecture fragment, Glauber describes the Trinity test, the first detonation of a nuclear weapon:

(00:41:20 - 00:45:37)

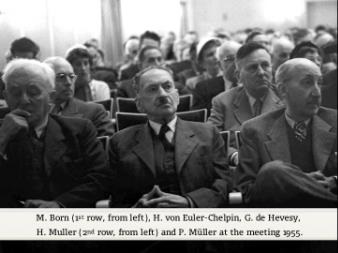

Only three weeks after this first atomic explosion, the bombings of Hiroshima and Nagasaki took place, quickly bringing the war to an end. Despite the general joy that the war was over, many of the scientists who had taken part in the Manhattan Project regretted the use of nuclear weapons. The research they had carried out before the war had not only opened the doors to “big science”, but also laid an enormous responsibility on their shoulders. Several scientists directly involved in making the bomb set up the Association of Los Alamos Scientists, whose manifesto stated, “The object of this organization is to promote attainment and use of scientific technological advances in the best interests of humanity.” In the post-war period some scientists became as recognisable as celebrities, and many became advocates of social responsibility and the total abolition of nuclear weapons. On July 15th, 1955, a decade after the Trinity test, 18 Nobel Laureates signed the Mainau Declaration at the 5th Lindau Nobel Laureate Meeting. One of its sentences reads as follows: “By total military use of weapons feasible today, the earth can be contaminated with radioactivity to such an extent that whole peoples can be annihilated. Neutrals may die thus as well as belligerents.”

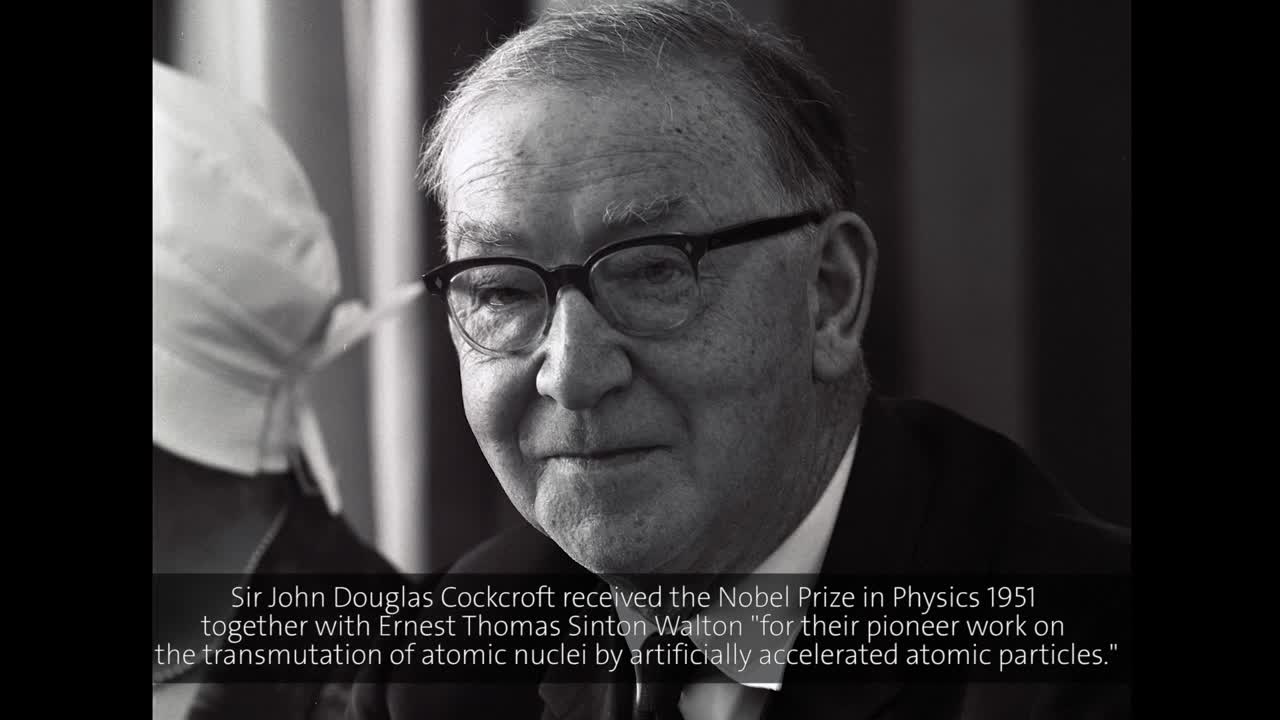

But the huge amounts of energy released in nuclear fission could also be put to peaceful use, and after the war many physicists turned their attention to developing the technology for nuclear power. Sir John Cockcroft, who shared the 1951 Nobel Prize in Physics with Ernest Walton for splitting the atom with artificially accelerated particles, was a firm believer in nuclear power as the energy source of the future. Cockcroft became the first director of Britain’s Atomic Energy Research Establishment at Harwell. During his lecture in Lindau in 1962, he stressed that “nuclear power will be essential by the end of the century”:

(00:00:42 - 00:02:52)

Although nuclear power prospered for three decades after the war, its association with nuclear weapons and the risk of radiation leakage caused widespread concern. Several reactor accidents, such as the Three Mile Island accident in the United States in 1979 and, most seriously, the Chernobyl disaster in 1986, caused nuclear power to fall out of favour in most industrialised countries. The large costs of building and operating power plants, including the handling and storage of radioactive waste, further cemented anti-nuclear views. Today, about 450 nuclear power reactors are in operation, producing approximately 11% of the world’s electricity, yet many industrialised countries are considering decommissioning or phasing out their nuclear power plants. The future of nuclear power may lie with countries in the Middle East, Asia and South America; the United Arab Emirates and Turkey, for example, are currently building new nuclear power plants. The impending depletion of conventional fossil fuels, particularly oil, may reignite interest in innovative nuclear energy research and its potential use, despite the perceived risks.

Liquid sunshine and the battle with cancer

The birth of radioactivity marked a new era in medical research. Perceived as a miracle drug, radium quickly moved from the laboratory to the hospital when it was found that radium placed on or next to a tumour caused the tumour to shrink. We now know that ionising radiation damages cancer cells by making so many breaks in their DNA that the cells’ DNA repair mechanisms are overwhelmed.

The discoverers of radioactivity were keen to test the miraculous properties of radium on themselves. After Becquerel developed a burn from carrying a tube of radium in his coat pocket for two weeks, Pierre Curie fixed a sample of radium to his arm for up to ten hours a day and reported the appearance of a scar after 52 days. The uncanny luminous crystals fascinated everyone: the Curies would display glow-in-the-dark test tubes at dinner parties to the delight of their friends, and Marie Curie even kept a sprinkling of glowing radium salts at her bedside. They did not realise that this ongoing exposure to radioactive elements was making them ill, so much so that they declined to travel to Stockholm to accept their Nobel Prize, blaming their malaise on general exhaustion from working long hours in dire laboratory conditions. For decades after the discovery of radioactivity, radioactive elements were added to cosmetics and medicines, or used in paints for luminous watch dials, to note one famous example. Many of the workers in the Curies’ laboratory, as well as Marie Curie herself and her daughter and son-in-law, the Joliot-Curies, would die of illnesses, among them aplastic anemia and leukemia, that were probably linked to years of work around radioactive material. Yet at the same time, radiation therapy for cancer was developed at a relatively brisk pace.

The source of radium is uraninite, formerly known as pitchblende. At the beginning of the 20th century, uraninite quickly became a much sought-after mineral, but it was mined mainly at Joachimsthal (now Jáchymov), in today’s Czech Republic. The discovery of uranium ores in Colorado in 1913 galvanized the use of radium in cancer therapy. By 1926, the New York Cancer Hospital, now known as the Memorial Sloan Kettering Cancer Center, possessed 9 grams of radium, more than any other institution in the world at the time. Gioacchino Failla, who obtained his PhD under the tutelage of Marie Curie in Paris, became the hospital’s Director of Medical Physics and made several notable advances in radiotherapy. Much of the groundwork for these first standardised treatments was laid at the Radium Institute in Paris. It was there that fractionated radiotherapy was established, in which the total dose is delivered as a series of smaller doses over days or weeks rather than in a single short, intense treatment.

Radiotherapy takes two main forms: external beam radiotherapy (EBRT), or teletherapy, in which X-rays, or gamma-rays produced by a radioactive element, are delivered to the patient from an external source; and internal radiotherapy, or brachytherapy, in which the source is placed inside the patient’s body. A basic form of EBRT was to place radium inside a lead box, which was then directed at the patient. Failla was one of the first radiation oncologists to use gold tubes filled with radon gas produced by radium as an early method of brachytherapy. Gold had an advantage over glass: it kept beta-rays from harming the patient’s tissues but allowed gamma-rays to pass through. The gold tubes were cut into small pieces, known as seeds, which could be inserted near the cancerous tissue, a practice known as seed implantation that is still used today. From the 1930s onwards, radium teletherapy and radon seed brachytherapy began to be replaced by artificial radioactive isotopes.

The beginnings of nuclear medicine

In 1934, the Joliot-Curies demonstrated that radioactivity was not limited to naturally occurring elements but could also be produced artificially. In a series of experiments, they bombarded aluminium with alpha particles from a polonium source and observed that the aluminium continued to emit radiation even after the alpha source had been removed. The alpha particles had caused the expulsion of a neutron, creating a radioactive isotope of phosphorus. When the Joliot-Curies received the Nobel Prize in Chemistry in 1935, “in recognition of their synthesis of new radioactive elements”, they were already aware of the significance of their findings. Frédéric Joliot stated in his Nobel lecture, “(...) large quantities of radio-elements will be required. This will probably become a practical application in medicine.” The age of purifying a few grams of radioactive elements from huge quantities of minerals was coming to an end.
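The Joliot-Curies’ result can be written out as two nuclear equations in standard textbook form. An alpha particle (a helium-4 nucleus) is absorbed by aluminium-27 and a neutron is expelled, leaving phosphorus-30, an isotope that does not occur in nature; the phosphorus-30 then decays to stable silicon-30 by emitting a positron, with a half-life of about two and a half minutes, which is why the sample kept emitting radiation after the alpha source was taken away. Mass numbers and charges balance at each step.

$$
{}^{27}_{13}\mathrm{Al} + {}^{4}_{2}\mathrm{He} \;\rightarrow\; {}^{30}_{15}\mathrm{P} + {}^{1}_{0}\mathrm{n}
$$

$$
{}^{30}_{15}\mathrm{P} \;\rightarrow\; {}^{30}_{14}\mathrm{Si} + e^{+} + \nu_{e}
$$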

However, it is George de Hevesy who is known as “the father of nuclear medicine”. Before the Joliot-Curies’ work on creating artificial radioactive elements, the Hungarian-born Hevesy, then working in Rutherford’s laboratory in Manchester, came up with the idea that a radioactive substance could be used as an indicator, or tracer, for stable elements. He pursued this idea by studying the solubility of lead compounds using radium-D, now known to be the lead isotope ²¹⁰Pb, which is physically and chemically inseparable from stable lead. These studies paved the way for the use of radioactive tracers in living organisms. Hevesy examined the uptake and distribution of radioactive lead (²¹²Pb) in the broad bean plant, followed by a study of radioactive bismuth (²¹⁰Bi) injected into the muscles of rabbits, a noteworthy step in medical research. Once the Joliot-Curies had shown that radioactive isotopes could be produced artificially, Hevesy and physician Ole Chievitz used an artificial isotope of phosphorus (³²P) to follow the circulation of phosphorus and its uptake by the bones of animals. Hevesy was awarded the Nobel Prize in Chemistry in 1943. Soddy mentioned the importance of radioactive tracers in medicine in Lindau in 1954, noting that, like X-rays, tracers provide “a second sight” to the surgeon:

(00:16:47 - 00:17:28)

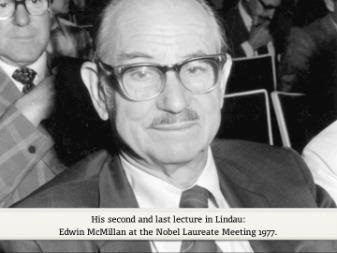

A significant development in the production of artificial radionuclides came with the construction of the first cyclotron by Ernest Lawrence (Nobel Prize in Physics, 1939) at the University of California in Berkeley. A cyclotron uses a magnetic field to keep charged particles such as protons circling on a spiral path and an alternating electric field to accelerate them each time they cross the gap between two D-shaped electrodes; the resulting high-energy particles are far more likely to trigger a nuclear reaction when they strike an atomic nucleus. After Fermi and his team completed the first nuclear reactor in Chicago in 1942, the production and use of radionuclides in medical research gained speed. Radioisotopes such as sodium-24 and iodine-131 became standard tools in diagnostic research, while cobalt-60 and cesium-137 replaced radium and radon gas in cancer radiotherapy. Lawrence’s brother, John Lawrence, refined the use of radioactive tracers at what is now the Lawrence Berkeley National Laboratory and made other breakthroughs in nuclear medicine using the cyclotron, as Nobel Laureate Edwin McMillan recalled during his lecture in Lindau in 1977:

(00:23:24 - 00:24:38)
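A cyclotron can use an alternating voltage of fixed frequency because, at speeds well below that of light, a charged particle circling in a uniform magnetic field B takes the same time for every orbit regardless of its speed: f = qB/(2πm). The particle’s maximum kinetic energy is then set by the radius R of the machine, E = (qBR)²/(2m). The Python sketch below evaluates both formulas for a proton. The 1 tesla field and 0.5 m radius are hypothetical values chosen for illustration, not the specifications of Lawrence’s machines, and relativistic effects are ignored.

```python
import math

# Physical constants in SI units (CODATA values)
PROTON_CHARGE_C = 1.602176634e-19    # elementary charge
PROTON_MASS_KG = 1.67262192369e-27   # proton rest mass
JOULES_PER_MEV = 1.602176634e-13


def cyclotron_frequency_hz(b_tesla, charge=PROTON_CHARGE_C, mass=PROTON_MASS_KG):
    """Orbital frequency f = qB / (2*pi*m); independent of speed (non-relativistic)."""
    return charge * b_tesla / (2 * math.pi * mass)


def max_kinetic_energy_mev(b_tesla, radius_m, charge=PROTON_CHARGE_C, mass=PROTON_MASS_KG):
    """Kinetic energy at the outer radius: E = (qBR)^2 / (2m), ignoring relativity."""
    momentum = charge * b_tesla * radius_m  # p = qBR for a circular orbit
    return momentum ** 2 / (2 * mass) / JOULES_PER_MEV


if __name__ == "__main__":
    # Hypothetical machine: 1 T field, 0.5 m extraction radius
    print(f"{cyclotron_frequency_hz(1.0) / 1e6:.1f} MHz")  # about 15.2 MHz
    print(f"{max_kinetic_energy_mev(1.0, 0.5):.1f} MeV")   # about 12.0 MeV
```

Note that the frequency does not depend on the radius: as the proton speeds up it moves onto a wider circle, so each lap still takes the same time and stays in step with the alternating field.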

X-rays: clinical diagnosis and radiotherapy

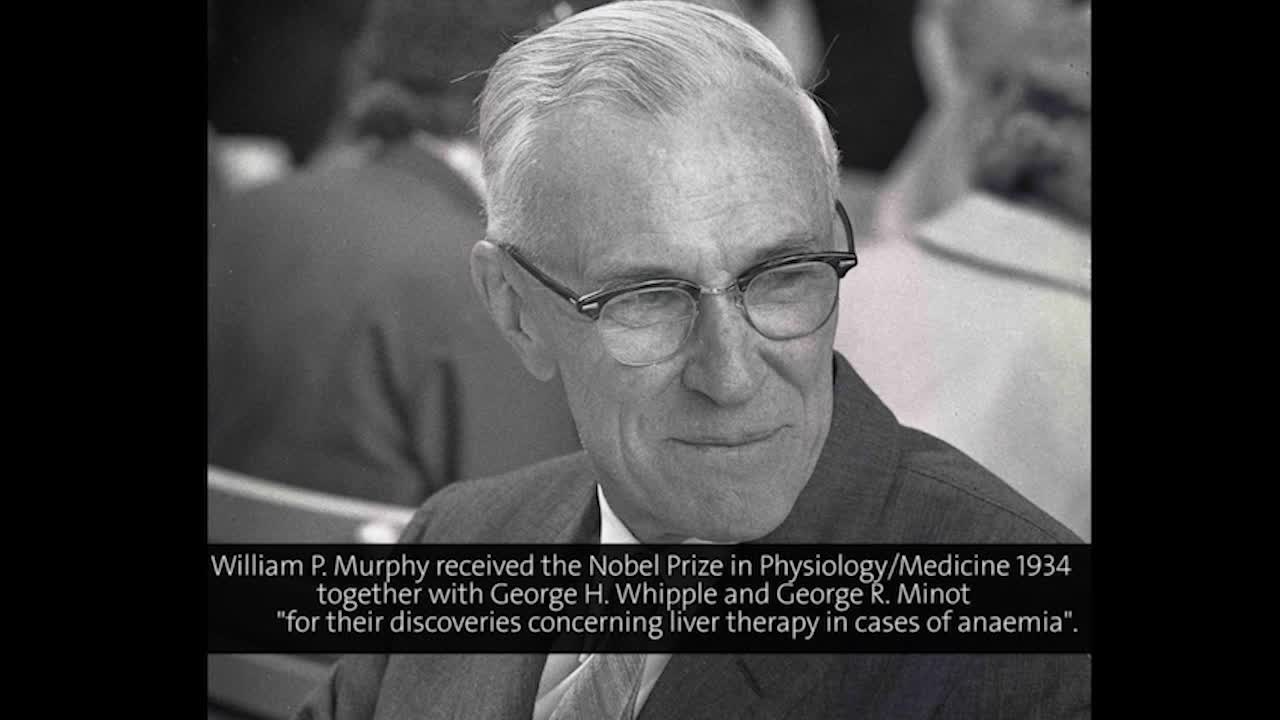

Röntgen’s “X-ray photograph” of his wife’s hand was the first example of projectional radiography, and was understandably welcomed by the medical community. It was now possible to see broken bones and to locate foreign bodies such as bullets and shrapnel. Radiation therapy using X-rays was also put into practice remarkably soon after their discovery. The first medical uses were for conditions such as eczema and lupus, and the first cancer treatment using X-rays was reported in France in 1896. The drawback of these early treatments was the severe damage to normal tissue: the low-energy X-rays of the time were absorbed mostly in the skin and superficial tissues rather than reaching deeper tumours. Patients as well as doctors suffered burns and deformities, and deaths from radiation injuries were frequent in the first decades of X-ray use. Fortunately, the early problem of poor penetration had largely been overcome by the middle of the 20th century, and projectional radiographs became a mainstay of medical imaging. In radiation therapy, the development of compact linear accelerators that could rotate around the patient boosted survival rates for cancer patients. As with radioisotope therapy, it took decades to work out appropriate dosage rates for various malignancies and how to maximise the efficacy of treatment. During the 10th Lindau Nobel Laureate Meeting in 1960, physician and Nobel Laureate William Parry Murphy explained what was then the standard method of treating leukemia using X-rays:

(00:07:33 - 00:09:02)

In the next lecture fragment, Murphy voices his disapproval of chemotherapy for chronic leukemia. Since then, however, the rapid development of chemotherapy drugs in the second half of the 20th century has made chemotherapy, rather than X-rays, the standard treatment for this disease, even though the side effects Murphy mentions still remain a significant problem for many cancer patients.

(00:28:11 - 00:29:53)

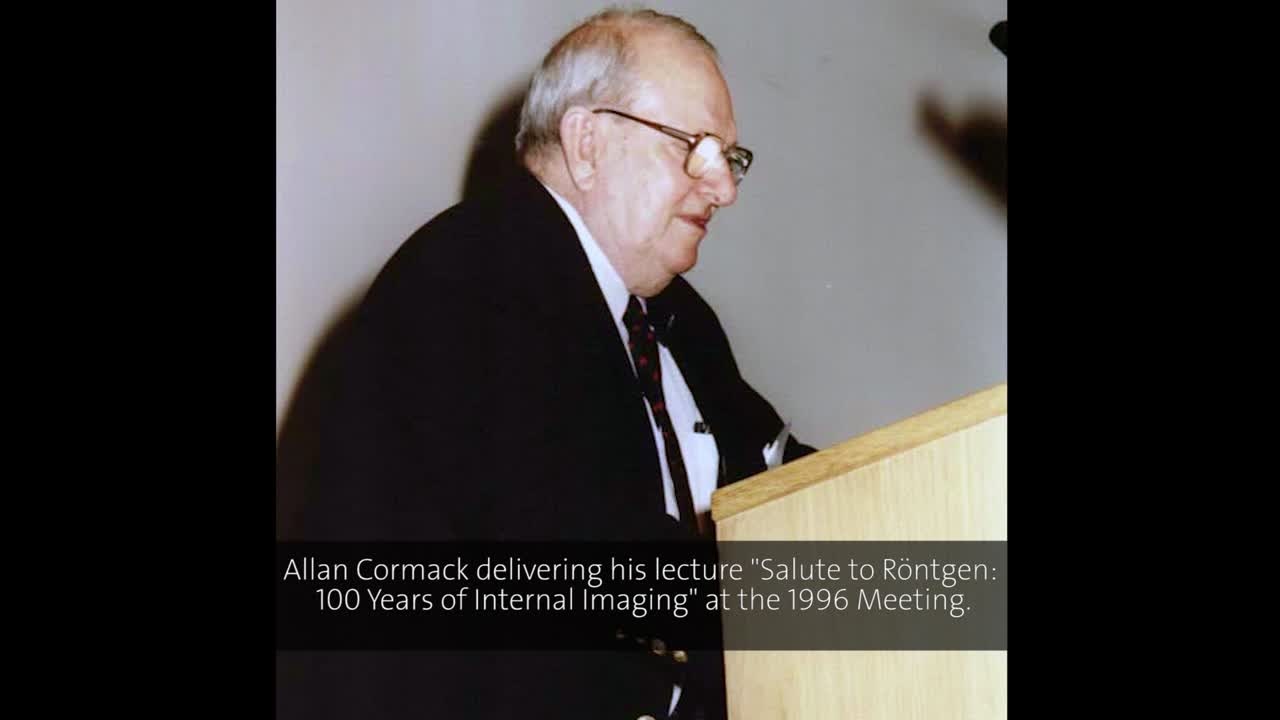

X-rays are now used more often as a powerful diagnostic tool than as a means of destroying tumours. Soon after Murphy’s lecture in Lindau, Allan McLeod Cormack published two papers, in 1963 and 1964, on the mathematics of reconstructing how X-rays are absorbed inside a body from measurements taken along many different lines through it, work that could greatly improve radiotherapy. As the only nuclear physicist in Cape Town in the mid-1950s, Cormack had been asked to work part-time in the radiology department of a local hospital, and was struck by the fact that radiotherapy was planned as if patients were homogeneous, like “a block of wood or a tank of water”, to quote Cormack, without considering how differently the various human tissues absorb X-rays. He set out to solve the problem, but his theoretical solutions aroused almost no interest. In 1971, Cormack learned of the EMI-scanner, the first computed tomography machine, developed by Godfrey Hounsfield:

(00:14:37 - 00:17:00)

Cormack and Hounsfield were jointly awarded the Nobel Prize in Physiology or Medicine in 1979 for their independent work on “the development of computer assisted tomography”. Over time, the EMI-scanner evolved into today’s computed tomography (CT) scanner, which enables physicians and radiologists to visualise cross-sections of internal organs and tissues and to reconstruct them in three dimensions. CT has become a fundamental part of medical research and clinical diagnosis, but, as Cormack noted in his lecture, it can also be used in many other disciplines, such as archaeology, geology and materials science. Beyond CT and medical research, X-ray technology is now used to observe outer space through X-ray astronomy, to study molecular structure and in materials processing, to name just a few applications.
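Cormack’s central point, that a single X-ray measurement only reveals the total absorption along one line while the body is anything but homogeneous, can be illustrated with a toy reconstruction. In the Python sketch below, a hypothetical 2 × 2 slice has illustrative attenuation coefficients (in cm⁻¹, one value per pixel, not measured data). Each ray’s transmitted fraction follows the exponential attenuation law I/I0 = exp(−Σ μ·length), taking the logarithm turns every measurement into a linear equation in the unknown pixel values, and the pixels are recovered by least squares. This is a teaching example only: it is not the analytical method Cormack published, nor the algorithm used in Hounsfield’s EMI scanner, and all names are hypothetical.

```python
import math


def transmitted_fractions(mu, rays):
    """I/I0 for each ray: exp(-sum over crossed pixels of mu * path length in cm)."""
    return [math.exp(-sum(mu[j] * length for j, length in ray.items())) for ray in rays]


def solve_linear(a, b):
    """Solve a small square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def reconstruct(fractions, rays, n_pixels):
    """Recover per-pixel attenuation coefficients from measured transmitted fractions."""
    # Each measurement gives a line integral: p = -ln(I/I0) = sum(mu * length)
    p = [-math.log(f) for f in fractions]
    # System matrix: A[k][j] = path length of ray k through pixel j (0 if not crossed)
    a = [[ray.get(j, 0.0) for j in range(n_pixels)] for ray in rays]
    # Least squares via the normal equations (A^T A) mu = A^T p
    ata = [[sum(a[k][i] * a[k][j] for k in range(len(rays))) for j in range(n_pixels)]
           for i in range(n_pixels)]
    atp = [sum(a[k][i] * p[k] for k in range(len(rays))) for i in range(n_pixels)]
    return solve_linear(ata, atp)


if __name__ == "__main__":
    # Hypothetical slice, pixels laid out as  0 1 / 2 3 ; pixel 3 is a denser, "bone-like" region
    mu_true = [0.2, 0.2, 0.2, 0.5]  # illustrative values in cm^-1
    side, diag = 1.0, math.sqrt(2.0)  # path lengths in cm for a 1 cm pixel
    rays = [
        {0: side, 1: side}, {2: side, 3: side},  # two horizontal rays
        {0: side, 2: side}, {1: side, 3: side},  # two vertical rays
        {0: diag, 3: diag},                      # one diagonal ray
    ]
    measured = transmitted_fractions(mu_true, rays)
    print([round(v, 3) for v in reconstruct(measured, rays, 4)])  # [0.2, 0.2, 0.2, 0.5]
```

With only the two horizontal and two vertical rays, the pixel values would not be uniquely determined, since a checkerboard pattern (+t, −t, −t, +t) changes no row or column total. The extra diagonal ray removes that ambiguity, a small-scale version of why CT needs measurements from many angles.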

The question of the safety of radioactivity

The multitude of discoveries concerning radiation and radioactivity made an enormous impact on physics, chemistry, biology and medicine. New scientific fields emerged and promising technologies were introduced, yet the use of nuclear weapons during World War II and the contamination resulting from nuclear testing prompted scientists to rethink their attitudes towards peacetime uses of radiation as well. Until then, limiting unnecessary exposure to radiation for patients and doctors had not been a priority. From the 1920s, shoe shops in Britain and the U.S. were equipped with shoe-fitting fluoroscopes, which allowed customers to view X-ray images of their feet in order to choose the right shoe size. This invention was aimed particularly at children. As the field of genetics began to take shape, many began to ask what impact radiation doses had on human DNA. It was in this atmosphere that Hermann Joseph Muller (Nobel Prize in Physiology or Medicine, 1946) voiced his fears about genetic damage in the expected age of radiation: “I cannot find justification for the large doses received in medical irradiation.”

(00:40:43 - 00:47:46)

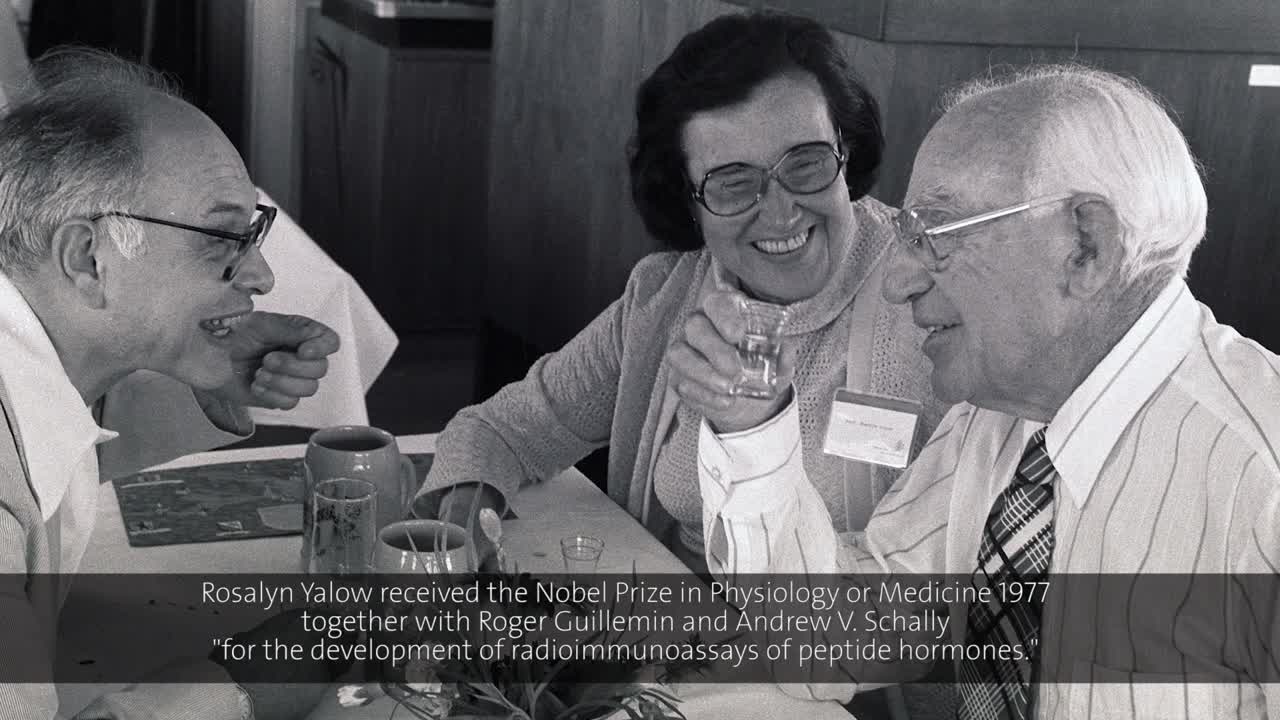

Nearly thirty years later, nuclear physicist and Nobel Laureate Rosalyn Yalow expressed a totally different view, blaming the public’s irrational fear of radiation at any level on headlines that never caught up with the facts:

(00:29:32 - 00:33:35)

The current attitude to radiation and radioactivity is still laced with fear, particularly regarding nuclear power plant disasters, protection against natural UV radiation from the sun and leakage of radioactive waste. The use of radiation in medicine is guided by the ALARA principle, an acronym for “As Low As Reasonably Achievable”. The benefits of modern-day life-saving medical procedures greatly outweigh the small risks of radiation use. In this respect, the role of scientists as effective communicators is considerable. “As scientists we must be prepared to discuss with the public why we cannot survive in a no-risk society.” Yalow expressed this sentiment in Lindau over three decades ago, and it is still relevant today.

Footnotes

https://assets.nobelprize.org/uploads/2018/06/chadwick-lecture.pdf

http://chandra.harvard.edu/tech/

http://science.sciencemag.org/content/102/2659/608.2

https://www.aps.org/publications/apsnews/200803/physicshistory.cfm

https://www.aps.org/publications/apsnews/200705/physicshistory.cfm

https://www.europhysicsnews.org/articles/epn/pdf/2011/05/epn2011425p18.pdf

https://www.healio.com/hematology-oncology/news/print/hemonc-today/%7B2df0dfa1-ce57-490a-8bea-6533f4a89f84%7D/the-shoe-fitting-fluoroscope-a-little-known-application-of-the-x-ray

https://www.lindau-nobel.org/enrico-fermi-and-the-dawn-of-the-nuclear-age/

https://www.lindau-nobel.org/roy-glauber-and-his-time-in-los-alamos/

https://www.lindau-nobel.org/the-manhattan-project-life-in-los-alamos/

https://www.mediatheque.lindau-nobel.org/research-profile/laureate-soddy#page=all

https://www.mskcc.org/blog/hot-times-radium-hospital-history-radium-therapy-msk

https://www.nobelprize.org/prizes/chemistry/1935/joliot-curie/facts/

https://www.nobelprize.org/prizes/physics/1939/lawrence/facts/

https://www.nobelprize.org/prizes/themes/marie-and-pierre-curie-and-the-discovery-of-polonium-and-radium/

https://www.nytimes.com/1998/10/06/science/a-glow-in-the-dark-and-a-lesson-in-scientific-peril.html

http://www.world-nuclear.org/information-library/current-and-future-generation/nuclear-power-in-the-world-today.aspx

Bryson, B. (2003) A Short History of Nearly Everything. Doubleday.

Carlsson, S. (1995) A Glance at the History of Nuclear Medicine. Acta Oncologica 34 (8), 1095-1102.

Connell, P.P., Hellman, S. (2009) Advances in Radiotherapy and Implications for the Next Century: A Historical Perspective. Cancer Research 69 (2).

Fernandez, B. (2013) Unravelling the Mystery of the Atomic Nucleus. A Sixty Year Journey 1896 – 1956. English version by Georges Ripka. Springer Science + Business Media, New York, NY.

Feynman, R.P. and Leighton, R. (1985) Surely You’re Joking Mr Feynman! Vintage, London.

Jaccard, M. (2005) Sustainable Fossil Fuels: The Unusual Suspect in the Quest for Clean and Enduring Energy, pp.101-111. Cambridge University Press, New York, NY.

Rogers, J.D. (2013) The Neutron’s Discovery – 80 Years On. Physics Procedia 43, 1-9.