Imaging in Science

by Hanna Kurlanda-Witek

A coastal landscape on our desktop, photographs of loved ones in our wallet, images of world events beneath the headlines…photographs surround us, signalling a range of emotions to our brains. With the rise of smartphones, photos have become even more ubiquitous, with the average phone user storing over 400 images. We take ever more photographs to keep something in our memory: the bus schedule at a country bus stop, price codes of items we’d like to buy, or simply a reminder of that summer picnic during a rainy week in November. The revolution that photography has undergone in only a few decades is often underestimated. At his last lecture at Lindau in 2015, the late Sir Harold Kroto gave a brief course on how to take a photograph:

(00:17:31 - 00:18:46)

With the growth of imaging technology, there is little wonder that many scientific fields are placing more and more emphasis on up-to-date imaging. This is the most powerful type of data – one that speaks to vision, the strongest human sense.

In the past several decades, images in science have allowed us to see, and therefore to discover and learn about, fundamental processes in diverse fields, from the medical sciences to earth and life sciences. We are now able to observe black holes in the Milky Way with the use of telescopes orbiting in space. We can visualise the detrimental effects of a stroke on the human brain. We are even able to discern ribosomes in cells. The physical sciences are at the core of these breakthroughs, allowing astronomers, doctors, radiologists and biologists to see objects at a closer range or to visualise the unseen or obscure.

“To the Top of the Atmosphere and Beyond” – Visualising the Cosmos

In his book on the history of telescopes, Geoff Andersen writes that, in sufficient darkness, we are able to witness up to 2000 stars in a single night using our eyes alone (2001 if you count the rise of the Sun). Our eyes are powerful tools for scanning the night sky and astronomical occurrences have absorbed cultures since ancient times, which resulted in written descriptions and drawings of moons and stars on stone walls, long before many earthly natural phenomena were explained.

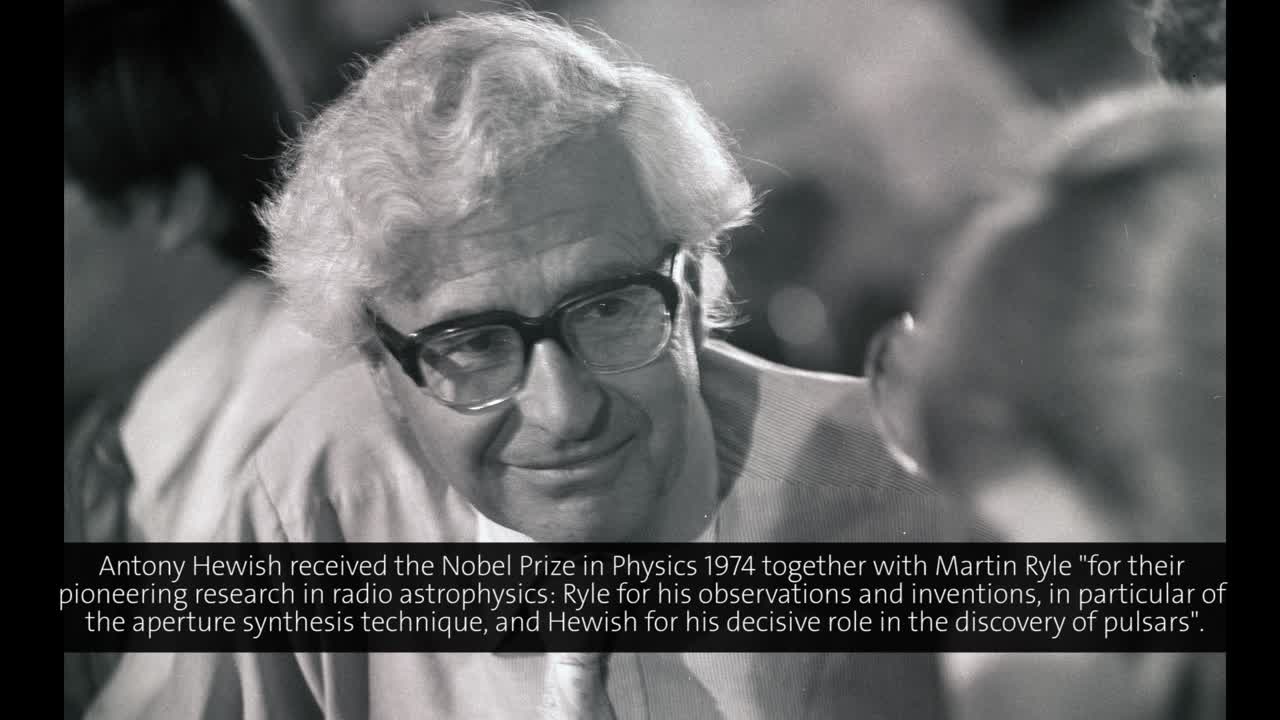

During the 41st Lindau meeting, Physics Nobel Laureate Antony Hewish described a photo of a pulsar, or type of neutron star, in the Crab Nebula, which is what remains from a supernova, an explosion marking the death of a large star.

(00:11:39 - 00:13:24)

As Hewish explained, the supernova was observed by Chinese and Arab astronomers in the year 1054; the Chinese referred to it as a “guest star”, which was visible even in daylight for 23 days. Over the centuries, astronomy became the base of scientific thought and an attractive hobby for learned noblemen. Here is Riccardo Giacconi’s account of the unusual 16th century astronomer, Tycho Brahe, who set the stage for the later works of Kepler and Newton:

(00:16:32 - 00:20:35)

“The telescope was the first physical apparatus that extended human perception beyond natural limits”, wrote Michael Marett-Crosby, and the astronomical progress of the 20th century has magnified that perception to an enormous scale. The Hooker telescope, a 2.5-metre optical telescope, built at the Mount Wilson Observatory in California in 1917, was the world’s largest telescope for over 30 years, and gave astronomers an unprecedented view of celestial bodies. In 1920, Albert Michelson and Francis Pease used the Hooker telescope to determine the size of a star. Edwin Hubble obtained hundreds of photographs with the telescope to demonstrate that nebulae were in fact remote galaxies, a finding presented in his famous paper, “Cepheids in Spiral Nebulae”. These observations led Hubble to find that most galaxies are moving away from each other, and hence that the universe is expanding.

The Earth is peppered with astronomical observatories, and while telescopes are becoming ever larger and more technologically advanced, there is always the barrier of the Earth’s atmosphere that must be overcome, particularly with respect to optical telescopes. With the advent of space exploration in the 1960s, the idea emerged to send telescopes into space. In 1968, the orbiting astronomical observatory OAO-2, or Stargazer, became the first successfully launched space telescope, collecting ultraviolet data from the Earth’s orbit. Today, it is common to compare data from different telescopes, each of which detects a certain portion of the electromagnetic spectrum: radio, infrared, visible, ultraviolet, X-ray or gamma-ray. During his lecture, “Seeing Farther with New Telescopes”, the 2006 Physics Nobel Laureate John Mather showed the difference in images taken with the optical and infrared capabilities of the Hubble telescope:

(00:18:54 - 00:20:24)

In October 2018, the primarily infrared James Webb Space Telescope (JWST) is scheduled for launch from French Guiana. This telescope will be the successor to the Hubble telescope (launched in 1990); it is longer (22 metres in length versus the Hubble’s 13.2 metres) and has a primary mirror with a collecting area about 7 times larger than that of the Hubble telescope. The JWST will be placed 1.5 million kilometres away, where it is hoped it will record data from the first galaxies of the universe. In this lecture snippet, John Mather presents a film of how the JWST will unfold into operating mode:

(00:20:25 - 00:22:50)

Despite the current reluctance to send humans into space, our curiosity about the cosmos has not waned, and the “microscopes of the sky”, as Nobel Laureate Charles Townes called telescopes in 1987, will continue to transfer visual information on what is happening in the universe. Paradoxically, by disentangling these astronomical mysteries, we are able to find out more about our own planet.

“New Kind of Invisible Light” – Pioneers in Medical Imaging

Wilhelm Röntgen’s wife Anna Bertha exclaimed, “I have seen my death!” when she saw the X-ray image of her hand that her husband had taken. This was essentially the first X-ray radiogram, and the discovery of X-rays won Röntgen the first Nobel Prize in Physics in 1901. It certainly must have left the young Anna Bertha aghast to see the clearly delineated bone structure of her hand, along with the ring she wore, yet over a century later, many of us are willing to wait hours in hospital emergency rooms to locate the source of our pain with X-rays. Even if we’ve never seen X-ray images of ourselves at the hospital or dentist’s office, it is certain that our hand luggage has been subject to X-rays at an airport. Röntgen’s experiments gave rise to the magical ability of visualising the internal form of objects.

The first steps in developing the science underpinning the two main technologies used today for medical imaging – computed tomography (CT) and magnetic resonance imaging (MRI) – were taken soon after World War II. In CT, X-rays pass through an object, and the signal, which results from different attenuation of the beam by the object, is registered by a detector. Attenuation is dependent on the density of the object material; for example, in medical imaging, bones attenuate more than muscles, which is why they stand out in sharper contrast in an X-ray image. X-ray beams are polychromatic, that is, they contain a spectrum of photon energies. This spectrum arises in the X-ray tube: when fast electrons interact with the atoms of the target material, they are slowed down, and photons are emitted as a result. This emission of electromagnetic radiation of a range of energies is known as bremsstrahlung. The detector receives the photons that have passed through the object and emits light, which is then converted to an electric signal and digitally processed. When many X-ray projections of an object are taken from various angles, cross-sections of the object can be reconstructed from them, and the more angles are sampled, the better the reconstructed image. This technique was developed independently by Allan Cormack and Godfrey Hounsfield. During his last lecture in Lindau in 1997, Cormack, a nuclear physicist from Cape Town, South Africa, explained how he had encountered the main problem in X-ray imaging:

(00:06:45 - 00:09:23)

Image reconstruction relies on analytical methods based on the Radon transform, which Johann Radon formulated in 1917. Here, Cormack describes the mathematics of the Radon transform in Lindau in 1984:

(00:03:20 - 00:04:51)
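The idea of collecting attenuation measurements from several angles and combining them into a cross-section can be illustrated with a toy calculation. The sketch below is not Cormack’s or Hounsfield’s method: it assumes a made-up square “phantom” with illustrative attenuation values, takes only two perpendicular parallel-beam views, applies the textbook Beer-Lambert law to obtain detector intensities, and then uses plain unfiltered back-projection. All function names are hypothetical.

```python
import numpy as np

def make_phantom(size=32):
    """Grid of attenuation coefficients per pixel (illustrative values only)."""
    phantom = np.zeros((size, size))
    phantom[8:24, 8:24] = 0.02     # assumed soft-tissue-like region
    phantom[14:18, 14:18] = 0.10   # assumed denser, bone-like insert
    return phantom

def project(phantom, axis):
    """One parallel-beam view: sum attenuation along each ray (a line integral)."""
    return phantom.sum(axis=axis)

def transmitted_intensity(line_integrals, i0=1.0):
    """Beer-Lambert law: intensity reaching the detector behind the object."""
    return i0 * np.exp(-line_integrals)

def back_project(view_rows, view_cols):
    """Unfiltered back-projection: smear both views across the grid and average."""
    return (view_rows[:, None] + view_cols[None, :]) / 2.0

phantom = make_phantom()
view_rows = project(phantom, axis=1)   # rays travelling along each row
view_cols = project(phantom, axis=0)   # rays travelling along each column
detector = transmitted_intensity(view_rows)
reconstruction = back_project(view_rows, view_cols)

# The brightest reconstructed pixel falls inside the dense insert
peak = np.unravel_index(reconstruction.argmax(), reconstruction.shape)
print("Peak of reconstruction at pixel", peak)
```

With only two views the reconstruction is a blurred cross rather than a faithful image, which is one way to see why more viewing angles give a better result.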

Cormack published his findings in two papers in 1963 and 1964, but only three copies of the paper were requested (this being an era predating photocopiers, hence the author had to be contacted for paper copies), which was “very disappointing”, as Cormack reminisced over thirty years later. Hounsfield had not heard of Cormack’s mostly theoretical work on CT scanning when he began his experimental work in England in the late 1960s, using a computer to calculate image reconstructions. He patented the CT for medical applications in 1968, and by 1971 the first scan of a patient was performed, quickly leading to widespread clinical use (“and they went wild about it...”, as Cormack himself put it). Both Cormack and Hounsfield received the Nobel Prize in Physiology or Medicine in 1979, and the award ceremony was the first time the two scientists had met.

The downside of CT imaging is that prolonged and repeated imaging may harm the patient, owing to the damaging effects of X-rays on DNA. MRI is an imaging technique that was developed in the early 1970s, hence in parallel to CT imaging. The technique is based on NMR (nuclear magnetic resonance), and initially was known as NMRI, or nuclear magnetic resonance imaging, yet the first word, “nuclear”, was dropped, as it made patients uneasy. Nobel Laureate Douglas Osheroff presented the history of NMR and MRI during his lecture in Lindau in 2012:

(00:12:48 - 00:19:20)

What is the principle of MRI? The process is based on atomic nuclei, specifically protons, reacting to externally applied magnetic fields and radio waves. As the human body consists mostly of water, and water molecules consist of two hydrogen atoms (each with a single proton as its nucleus) and an oxygen atom, we can say the body is proton-dense. This abundance of protons is fundamental in MRI, as it is the protons that react to the external magnetic field, and the higher the concentration of protons, the better the image contrast. Nuclei, or in the case of hydrogen, protons, possess a particular property known as spin. The application of an external magnetic field causes slightly more than half of the spins to become aligned with the field and the rest to become aligned in the opposite direction. This tiny surplus of aligned spins is known as net magnetisation, and this is the source of an MR signal. A coil placed close to the object transmits radio-frequency pulses, which excite the spins. When the radio-frequency pulse is switched off, the spins realign with the magnetic field, which produces a relaxation signal. This signal is dependent on various physical characteristics, such as density and water content, and this is why MRI is best used to visualise soft tissues in the body.
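To give a feeling for how small the surplus of aligned spins is, the following rough estimate uses the standard textbook two-level (spin-1/2) Boltzmann model, which the text above does not spell out. The field strength of 1.5 T and the body temperature of 310 K are assumed, illustrative values, and the function names are hypothetical.

```python
import math

PLANCK_H = 6.62607015e-34      # Planck constant, J s
BOLTZMANN_K = 1.380649e-23     # Boltzmann constant, J/K
PROTON_GAMMA_BAR = 42.577e6    # proton gyromagnetic ratio / (2*pi), Hz per tesla

def larmor_frequency_hz(field_tesla):
    """Radio frequency at which protons resonate in the given magnetic field."""
    return PROTON_GAMMA_BAR * field_tesla

def spin_excess_fraction(field_tesla, temperature_kelvin):
    """Fractional surplus of spins aligned with the field (two-level Boltzmann model)."""
    energy_gap = PLANCK_H * larmor_frequency_hz(field_tesla)
    return math.tanh(energy_gap / (2.0 * BOLTZMANN_K * temperature_kelvin))

ASSUMED_FIELD_T = 1.5          # assumed scanner field strength
ASSUMED_BODY_TEMP_K = 310.0    # assumed body temperature

frequency = larmor_frequency_hz(ASSUMED_FIELD_T)
excess = spin_excess_fraction(ASSUMED_FIELD_T, ASSUMED_BODY_TEMP_K)
print(f"Resonance frequency: {frequency / 1e6:.1f} MHz")
print(f"Surplus of aligned spins: about {excess * 1e6:.1f} per million")
```

Under these assumptions the surplus amounts to only a few spins per million, which is why the sheer abundance of protons in the body matters so much for obtaining a usable signal.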

Paul Lauterbur and Peter Mansfield were awarded the Nobel Prize in Physiology or Medicine in 2003 “for their discoveries concerning magnetic resonance imaging”. Both scientists attended the Lindau meetings only once, in 2005. However, Allan Cormack provided a good explanation of the physics of MR imaging in his 1984 lecture at Lindau, despite the fact that we can’t see the “bit of hand-waving” he refers to by way of explanation:

(00:13:53 - 00:18:16)

When Lauterbur and Mansfield won the Nobel Prize, nearly 20 years after Cormack’s lecture, it was the culmination of many years of often frustrating research. Lauterbur’s now-famous paper containing noisy MR images of two test tubes, one filled with heavy water and one with ordinary water, was rejected by Nature in 1971. With time, the new imaging method began to gather interest. Mansfield and his student Andrew Maudsley produced the first image of a human body part, the cross-section of Maudsley’s finger, in 1977. Today, the use of MRI is extremely common and steadily growing; in Europe, depending on the country, 1000 to upwards of 11000 scans were performed per 100 000 inhabitants in 2013 alone. Not only is the technology of scanners being developed – scanners are beginning to resemble armchairs rather than tunnels – but the principles of MRI are also changing. Currently, scientists are combining optical pumping (for which Alfred Kastler received the Nobel Prize in Physics in 1966) and NMR to obtain images of lungs. As lungs are filled with air, not water, they are very difficult to image using conventional MRI, yet having a patient inhale polarised gas provides sufficient imaging contrast. Claude Cohen-Tannoudji briefly explained the method in 2015:

(00:04:39 - 00:05:54)

It should be noted that CT and MRI are used in scientific fields other than medical imaging; the use of MRI to study oil migration in rocks or the use of CT scans in forensic medicine or to image fossils are just some examples. Developments in physics have made it possible for scientists to better see what is taking place inside an object without cutting it open. In clinical diagnosis, CT and MRI reveal various hidden diseases, enabling subsequent treatment. Yet in order to better understand the progression of these diseases, it is necessary to rescale the investigations to the cellular, or sub-cellular, level. George Orwell wrote, “To see what is in front of one’s nose needs a constant struggle” and nowhere is this more apparent than in microscopy. The problems of this rapidly developing field have directed many physicists towards biology.

“Thousands of Living Creatures in One Small Drop of Water” – Developments in Microscopy

The 17th century scientist and philosopher Robert Hooke was the first person to see and name plant cells, or “little boxes”, as he initially described them, in a slice of cork. However, his elaborate, custom-made microscope with gold etchings was not as good as the simple lens of his contemporary, Antoni van Leeuwenhoek, which provided a much larger magnification. Suddenly, the scientists of the day became aware that insects’ legs were covered in hairs, plants were made of smaller components, and a drop of supposedly clean water was home to swarms of tiny animals. The invisible had to be reckoned with.

In modern times, an enormous leap in the development of microscopy came in 1933, when Ernst Ruska and Max Knoll invented the electron microscope. Resolution, or the ability to tell one object apart from another under the microscope lens, was long presumed to be limited to about half the wavelength of light (roughly 200 nm) in optical microscopy. This is known as the Abbe limit, after the German physicist Ernst Abbe. Stefan Hell, Nobel Laureate in Chemistry in 2014 (alongside Eric Betzig and William E. Moerner), describes this theory in his lecture, given at Lindau in 2016:

(00:03:55 - 00:06:23)
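The figure of roughly 200 nm can be checked with Abbe’s formula, d = λ / (2·NA), a standard textbook relation that is not written out in the text. In the short sketch below, the wavelength of 550 nm (green light) and the numerical aperture of 1.4 (a good oil-immersion objective) are assumed, illustrative values, and the function name is hypothetical.

```python
def abbe_limit_nm(wavelength_nm, numerical_aperture):
    """Smallest resolvable distance according to Abbe's formula d = lambda / (2 NA)."""
    return wavelength_nm / (2.0 * numerical_aperture)

ASSUMED_WAVELENGTH_NM = 550.0       # assumed: green light
ASSUMED_NUMERICAL_APERTURE = 1.4    # assumed: oil-immersion objective

limit = abbe_limit_nm(ASSUMED_WAVELENGTH_NM, ASSUMED_NUMERICAL_APERTURE)
print(f"Abbe limit: about {limit:.0f} nm")
```

Because the numerical aperture of practical objectives cannot be raised much further, the only way to improve on this figure within Abbe’s formula is to shorten the wavelength.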

Ruska demonstrated that by using a stream of electrons instead of light, he would be able to surpass this diffraction limit and observe finer objects than optical microscopy would allow. At first, Ruska was unacquainted with de Broglie’s 1925 thesis on the wave theory of electrons. As Ruska himself remembered in his Nobel lecture, “ (...) I was very much disappointed that now even at the electron microscope the resolution should be limited again by a wavelength (of the "Materiestrahlung"). I was immediately heartened, though, when with the aid of the de Broglie equation I became satisfied that these waves must be around five orders of magnitude shorter in length than light waves. Thus, there was no reason to abandon the aim of electron microscopy surpassing the resolution of light microscopy.” The electron microscope made visualising single molecules or even atoms possible, and, to quote Ruska, “many scientific disciplines today can reap its benefits”. Ruska won the Nobel Prize in Physics in 1986, alongside Heinrich Rohrer and Gerd Binnig. He was going to attend the 38th Lindau Nobel Laureate Meeting in Physics in 1988, but sadly passed away one month before the meeting.
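Ruska’s “five orders of magnitude” can be reproduced with a few lines of arithmetic based on the de Broglie equation, λ = h / p. The sketch below is only an estimate: it assumes an accelerating voltage of 75 kV and visible light of 500 nm (neither value is taken from the text), ignores the relativistic correction of a few per cent, and uses a hypothetical function name.

```python
import math

PLANCK_H = 6.62607015e-34           # Planck constant, J s
ELECTRON_MASS = 9.1093837015e-31    # electron rest mass, kg
ELEMENTARY_CHARGE = 1.602176634e-19 # elementary charge, C

def electron_wavelength_m(voltage_volts):
    """Non-relativistic de Broglie wavelength of an electron accelerated through a voltage."""
    momentum = math.sqrt(2.0 * ELECTRON_MASS * ELEMENTARY_CHARGE * voltage_volts)
    return PLANCK_H / momentum

ASSUMED_VOLTAGE_V = 75_000.0          # assumed accelerating voltage
ASSUMED_LIGHT_WAVELENGTH_M = 500e-9   # assumed visible-light wavelength

electron_wavelength = electron_wavelength_m(ASSUMED_VOLTAGE_V)
ratio = ASSUMED_LIGHT_WAVELENGTH_M / electron_wavelength
print(f"Electron wavelength: {electron_wavelength * 1e12:.2f} pm")
print(f"Light wavelength is about 10^{math.log10(ratio):.1f} times longer")
```

Under these assumptions the electron wavelength comes out at a few picometres, about a hundred thousand times shorter than visible light, in line with Ruska’s recollection.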

What is interesting to note is that, while it became possible to see particular cells and even protein structures, before the advent of computers there was no way to immortalise the microworld under the lens. Biologists and materials scientists were aided by artists, who were able to colourfully convey the complex architecture to others using sketches and paintings. Kurt Wüthrich pays tribute to one such artist, Irving Geis, in his lecture in 2016:

(00:16:11 - 00:16:51)

The drawbacks of electron microscopy are well known and often lamented, particularly by biologists. Biological samples, even with good preparation, have low contrast in the resulting images, which means they must often be treated with metals to increase contrast. Moreover, the samples generally become dehydrated or deformed by freeze-drying, hence by peering into the microscope we are effectively looking at a sample that was.

“ (...) the dream really was to be able to have an optical microscope that could look at living cells with the resolution of an electron microscope (...)”, explained Eric Betzig during his lecture, “Working Where Others Aren’t”, in Lindau in 2015.

Over time, optical microscopy began to improve, and an important step in its development was the use of fluorescent stains in biological samples. A laser beam is directed onto the stained material, exciting the fluorescently labelled molecules, which then emit light at a longer wavelength. What emerges is a detailed map of fluorescently labelled elements, which can then be processed by visualisation software. Progress in image processing also greatly increased the quality of visualisation. Yet in order to achieve better resolution, it was clear that, as in electron microscopy, the diffraction limit had to be beaten. Eric Betzig expressed it very well when he asked the question, “What can we do as physicists to make better microscopes for biologists?” The Near-Field Scanning Optical Microscope (NSOM), perfected in the early 1990s, demonstrated resolution at the nanometre scale, but as Stefan Hell expressed in his 2016 lecture, “ (...) I felt, does this look like a light microscope? Of course not! This looks like a scanning force microscope. I wanted to break the diffraction barrier for a light microscope, that looks like a light microscope and operates like a light microscope. So something that no one anticipates”. Hell’s work eventually led to stimulated emission depletion microscopy, or STED, a scanning microscopy technique where the fluorescence of the molecules is turned on and off to increase resolution, “playing with molecular states”, as Hell described. A focused excitation laser beam, whose spot cannot be made smaller than the diffraction limit, is directed at the fluorescent molecules. The molecules’ electrons reach the excited state, and just before the spontaneous emission of photons, a second, red-shifted laser beam is guided onto the fluorescent molecules, depleting the fluorophores’ emission outside the centre of the beam by stimulated emission, effectively “keeping the molecules dark”. The molecules in the centre of the depletion beam fluoresce unhindered, and this is the area that is being imaged (Hell uses a diagram with a doughnut-shaped beam, where the centre of the doughnut is the imaged area).

In their 2015 lectures, Hell’s co-recipients of the Nobel Prize, William Moerner and Eric Betzig, gave very good explanations of what super-resolution is (for single molecules) and why it is needed, respectively:

(00:23:44 - 00:26:53)

(00:17:45 - 00:19:17)

The field of optical microscopy is constantly changing, with improvements in both technology and software. In this lecture, Steven Chu, another physicist with interests in the realm of life sciences, defines his “Christmas wish list” for optical microscopy:

(00:12:00 - 00:13:21)

Fortunately, for most Nobel Laureates, obtaining a Nobel Prize is a notable highlight, but does not mark the culmination of their research careers. Soon after receiving the Prize for photoactivated localization microscopy, or PALM, Betzig achieved yet another innovation in microscopy by introducing lattice light-sheet microscopy:

(00:25:46 - 00:27:10)

In their lectures, Betzig and Moerner also described a new method, known as PAINT, in which fluorophores are added to the sample during imaging and attach to certain areas of the cell or molecule; these areas can be focused on during the fluorophores’ short-lived fluorescence, which is detected by a camera.

At the moment, none of these super-resolution methods can be applied universally to every sample, but scientists are continually advancing these methods, and their combination, enabling real-time, fast, live-cell imaging, may soon become more than just a concept. “What I really believe at this point is that we are on a cusp of a revolution in cell biology, because we still have not studied cells as cells really are. We need to study the cell on its own terms”, said Betzig, concluding his lecture.

Obtaining a better image may be a goal in itself, and microscopy researchers must derive a great deal of satisfaction when they compare sharp images of cell organelles with previous fuzzy ones. The fascination is equal to that of the 17th century microscopists, peering into handmade lenses. But the essential message of these new technologies is that knowledge is power; the more we observe, the more we learn about biological mechanisms, which can only advance treatment of many illnesses that still afflict us.

The Future

In the next few years, the imaging techniques described in this topic cluster will become more sophisticated, through progress in resolution and instrument precision, but also as a result of new discoveries based on basic science. Whether the initial finding seems to be good for “damn little”, as Felix Bloch supposedly said of NMR in the 1950s, or a defined solution is actively pursued by applying physical processes, as in the development of STED microscopy, it is evident that theoretical physics is the groundwork for exciting applications in various fields. We have evidence in the form of incredible images to show for it.

Notes:

http://ec.europa.eu/eurostat/statistics-explained/index.php?title=Healthcare_resource_statistics_-_technical_resources_and_medical_technology&oldid=280129

http://jwst.nasa.gov/comparison_about.html

Andersen, G. (2006). The Telescope: Its History, Technology and Future. Princeton University Press, Princeton, New Jersey, USA.

Cierniak, R. (2011). X-ray Computed Tomography in Biomedical Imaging. Springer New York.

Dawson, M.J. (2013). Paul Lauterbur and the Invention of the MRI. Massachusetts Institute of Technology.

Edwards, S.A. (2006). The Nanotech Pioneers: Where are They Taking Us? Wiley-Verlag GmbH & Co KGaA, Weinheim.

Hudelist, M.A., Schoeffmann, K., Ahlström, D., and Lux, M. (2015). How Many, What and Why? Visual Media Statistics on Smartphones and Tablets. Workshop Proceedings of ICME 2015, 29 June - 3 July 2015, Torino, Italy.

Lang, K.R. (2006). Parting the Cosmic Veil. Springer.

Marett-Crosby, M. (2013). Twenty-five Astronomical Observations that Changed the World: And How to Make Them Yourself. Springer.

Mikla, V.I., Mikla, V.V. (2014). Medical Imaging Technology. Elsevier.

Ruska, E. (1986). The Development of the Electron Microscope and of Electron Microscopy. Nobel lecture.

Snyder, L.J. (2015). Eye of the Beholder. Johannes Vermeer, Antoni van Leeuwenhoek and the Reinvention of Seeing. W.W. Norton & Company.

Stephenson, F.R., Green, D.A. (2003). Was the supernova of AD1054 reported in European history? Journal of Astronomical History and Heritage 6(1), 46-52.

Thorley, J.A., Pike, J., Rappaport, J.Z. (2014). Super-resolution Microscopy: A Comparison of Commercially Available Options. In: Fluorescence Microscopy. Super-resolution and Other Techniques, Chapter 14. Cornea, A., Conn, P.M. (eds.), Elsevier.